Research Introduction

Stereo Confidence Estimation via Locally Adaptive Fusion and Knowledge Distillation

- College of AI Convergence

- 2022-09-06

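The paper "Stereo Confidence Estimation via Locally Adaptive Fusion and Knowledge Distillation," from the laboratory of Professor Sunok Kim (김선옥), has been accepted for publication in "IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI)". IEEE TPAMI is one of the world's most prestigious journals in artificial intelligence, with an impact factor of 24.3. Professor Kim led the study as first author, with the École Polytechnique Fédérale de Lausanne (EPFL) in Switzerland, Yonsei University, Korea University, and Ewha Womans University participating as collaborators.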

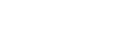

Stereo confidence estimation aims to estimate the reliability of the disparity estimated by stereo matching. Unlike previous methods that exploit a limited input modality, we present a novel method that estimates the confidence map of an initial disparity by making full use of tri-modal input, including matching cost, disparity, and color image, through deep networks. The proposed network, termed Locally Adaptive Fusion Networks (LAF-Net), learns locally-varying attention and scale maps to fuse the tri-modal confidence features. Moreover, we propose a knowledge distillation framework to learn more compact confidence estimation networks as student networks. By transferring the knowledge from LAF-Net as teacher networks, the student networks, which take only a disparity as input, can achieve comparable performance. To transfer more informative knowledge, we also propose a module to learn the locally-varying temperature in a softmax function. We further extend this framework to a multiview scenario. Experimental results show that LAF-Net and its variations outperform the state-of-the-art stereo confidence methods on various benchmarks.
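The distillation idea in the abstract can be illustrated with a small sketch. It is not the authors' implementation: it assumes each pixel carries a two-class logit vector (confident, unconfident), that the per-pixel temperature is already given rather than learned by a module, and that the loss is a KL divergence between softened teacher and student outputs. The function names are hypothetical.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Softmax over `logits` after dividing them by `temperature` (> 0)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def distillation_loss(teacher_logits, student_logits, temperatures):
    """Mean KL(teacher || student) over pixels.

    Assumption: `temperatures` holds one value per pixel, standing in for
    the locally-varying temperature map described in the abstract.
    """
    losses = []
    for t_logit, s_logit, temp in zip(teacher_logits, student_logits, temperatures):
        p = softmax_with_temperature(t_logit, temp)
        q = softmax_with_temperature(s_logit, temp)
        losses.append(kl_divergence(p, q))
    return sum(losses) / len(losses)
```

A higher temperature flattens the teacher distribution at that pixel, so the student receives a softer target there; a temperature near 1 leaves the teacher's output almost unchanged.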

[Figure 2] Confidence maps on KITTI 2015 dataset

-

StARformer: Transformer with State-Action-Reward Representations for Robot Learning

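The paper "StARformer: Transformer with State-Action-Reward Representations for Robot Learning," from the Robotics and AI Navigation (RAIN) laboratory of Professor Yu-Cheol Lee (이유철), has been accepted for publication in "IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI)". The paper, a collaboration with the Electronics and Telecommunications Research Institute (ETRI) and Stony Brook University in the United States, concerns end-to-end, imitation-learning-based autonomous navigation technology that uses reinforcement learning and Transformer models. Professor Lee participated as the corresponding author. IEEE TPAMI is one of the world's most prestigious journals in artificial intelligence, ranking in the top 0.54% of JCR with an impact factor of 24.314. The paper is scheduled for publication in December 2022.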

2022-10-18

[Figure 1] StARformer System Architecture

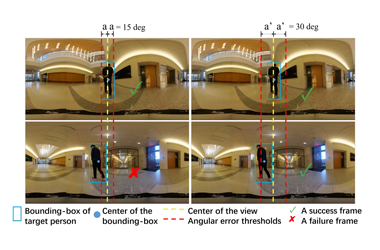

Reinforcement Learning (RL) can be considered as a sequence modeling task, where an agent employs a sequence of past state-action-reward experiences to predict a sequence of future actions. In this work, we propose State-Action-Reward Transformer (StARformer), a Transformer architecture for robot learning with image inputs, which explicitly models short-term state-action-reward representations (StAR-representations), essentially introducing a Markovian-like inductive bias to improve long-term modeling. StARformer first extracts StAR-representations using self-attending patches of image states, action, and reward tokens within a short temporal window. These StAR-representations are combined with pure image state representations, extracted as convolutional features, to perform self-attention over the whole sequence. Our experimental results show that StARformer outperforms the state-of-the-art Transformer-based method on image-based Atari and DeepMind Control Suite benchmarks, under both offline-RL and imitation learning settings. We find that models can benefit from our combination of patch-wise and convolutional image embeddings. StARformer is also more compliant with longer sequences of inputs than the baseline method. Finally, we demonstrate how StARformer can be successfully applied to a real-world robot imitation learning setting via a human-following task.
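The local step described above, in which image patches, the action, and the reward of one short window attend to each other, can be sketched roughly as follows. This is an illustration only, not the published architecture: it assumes a single attention head with identity query/key/value projections, tokens that already share one embedding size, and mean pooling to obtain one vector per step. The function names are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    peak = max(xs)
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Single-head scaled dot-product self-attention.

    Assumption: queries, keys, and values are the tokens themselves
    (identity projections), which keeps the sketch dependency-free.
    """
    dim = len(tokens[0])
    attended = []
    for query in tokens:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        weights = softmax(scores)
        attended.append(
            [sum(w * tok[d] for w, tok in zip(weights, tokens)) for d in range(dim)]
        )
    return attended


def star_representation(state_patches, action_token, reward_token):
    """Attend over one step's tokens, then mean-pool them into one vector."""
    tokens = state_patches + [action_token, reward_token]
    attended = self_attention(tokens)
    dim = len(attended[0])
    return [sum(tok[d] for tok in attended) / len(attended) for d in range(dim)]
```

For example, `star_representation([[1.0, 0.0], [0.0, 1.0]], [0.5, 0.5], [1.0, 1.0])` returns one two-dimensional vector summarizing that step; a sequence-level model would then attend over such per-step vectors together with the convolutional state features.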

[Figure 2] Imitation Learning based Scenario Experiments to Evaluate the StARformer Navigation Performance

This study introduces StARformer, which explicitly models strong local relations (Step Transformer) to facilitate the long-term sequence modeling (Sequence Transformer) in Visual RL. Our extensive empirical results show how the learned StAR-representations help our model to outperform the baseline in both Atari and DMC environments, as well as both offline RL and imitation learning settings. We find that the fusion of learned StAR-representations and convolution features benefits action prediction. We further demonstrate that our designed architecture and token embeddings are essential to successfully model trajectories, with an emphasis on long sequences. We also verify that our method can be applied to real-world robot learning settings via a human-following experiment.

-

Event-Based Emergency Detection for Safe Drone

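The paper "Event-Based Emergency Detection for Safe Drone," in which the laboratory of Professor Sung-Do Chi (지승도) studied an event-based intelligent control technique for the safety systems of AI drones, was published in the August 2022 issue of "Applied Sciences", an SCI-indexed international journal.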

2022-08-21

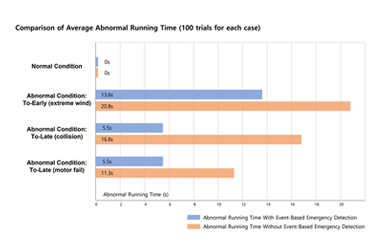

[Figure 1] Abnormal running time with / without proposed event-based emergency detection

Quadrotor drones have rapidly gained interest recently. Numerous studies are underway for the commercial use of autonomous drones, and distribution businesses in particular are seriously considering drone-delivery services. However, there are still many concerns about urban drone operations. The risk of failures and accidents makes it difficult to provide drone-based services in the real world. Many studies have introduced supplementary methods to handle drone failures and emergencies, but we found that these methods have a limitation: most of them improve PID-based control algorithms, the dominant drone-control method. This type of low-level approach lacks situation awareness and the ability to handle unexpected situations. This study introduces an event-based control methodology that takes a high-level diagnostic approach and implements situation awareness via a time window. While low-level controllers are left to operate the drone most of the time in normal situations, our controller operates at a higher level and detects unexpected behaviors and abnormal situations of the drone. We tested our method in real-time 3D computer simulation environments, and in several cases it detected emergencies that typical PID controllers were not able to handle. We were able to verify that our approach provides an additional layer of safety and better ensures safe drone operations. We hope our findings can contribute to the advance of real-world drone services in the near future.
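One way to picture the time-window idea is a supervisor that expects a particular event within a fixed window and reports an emergency when the window expires first. The sketch below is an assumption-laden illustration, not the method from the paper: the class name, the event name `waypoint_reached`, the status strings, and the fixed window length are all hypothetical.

```python
class TimeWindowMonitor:
    """High-level supervisor that flags an emergency when an expected
    event does not arrive within its time window (hypothetical design)."""

    def __init__(self, window_seconds):
        self.window_seconds = window_seconds
        self.expected = None   # name of the event we are waiting for
        self.deadline = None   # latest acceptable arrival time

    def expect(self, event_name, now):
        """Start waiting for `event_name`; it must arrive by now + window."""
        self.expected = event_name
        self.deadline = now + self.window_seconds

    def check(self, now, event_name=None):
        """Return 'idle', 'waiting', 'normal', or 'emergency'."""
        if self.expected is None:
            return "idle"
        if now > self.deadline:
            # The window closed before the expected event was seen.
            return "emergency"
        if event_name == self.expected:
            self.expected = None
            self.deadline = None
            return "normal"
        return "waiting"
```

In use, the low-level controller would keep flying the drone while the supervisor is polled periodically:

```python
monitor = TimeWindowMonitor(window_seconds=5.0)
monitor.expect("waypoint_reached", now=0.0)
status = monitor.check(now=6.0)  # "emergency": no event inside the window
```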